Beekeeping and artificial intelligence

The Challenge

According to the Environment America Research & Policy Center, beekeepers in recent years have reported losing an average of 30 percent of their honeybee colonies each winter, twice the loss considered economically tolerable. The organization also reports that wild bee populations are in decline.

The same organization notes that we rely on bees to pollinate 71 of the 100 crops that provide 90 percent of the world’s food. Among the causes of hive failure, queen failure accounts for between 25 and 40 percent of losses. Other causes trace back to environmental risks, poor nutrition, parasites, and disease.

So, how can we learn more about bee hive health, bee behavior, and queen failure to limit future hive losses? SAS, a leader in analytics software and services, set out to answer such questions.

As part of its commitment to using data and analytics to solve the world’s most pressing problems, SAS set out to learn more about bee hive health as a way to slow honeybee loss. By analyzing bee hives, we can gain insight into actionable steps that help slow their decline.

SAS’ objectives for monitoring and tracking bee hive health included the following:

- Understand bee hive health

- Measure and quantify aspects of the bees and the hive

- Provide insight into forage availability

- Determine vegetation bloom times

- Observe effects on bee behavior

- Detect important bee hive events

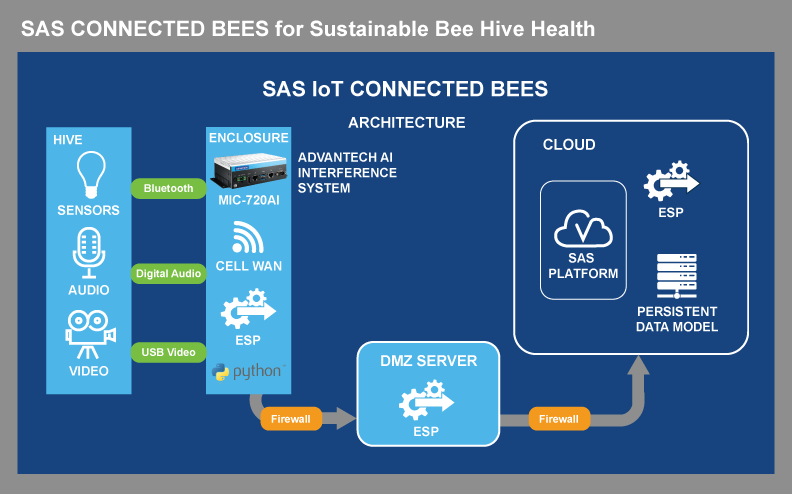

To accomplish these objectives, SAS combined Internet of Things (IoT) technology with machine learning, artificial intelligence (AI), and visual analytics. To get a complete picture of hive health, SAS needed to collect, visualize, and analyze several kinds of IoT data:

- Traditional Sensor Data – weight, temperature, humidity in the bee hive

- Audio Streams from Inside the Bee Hive – acoustic analytics

- Video Streams from Outside the Bee Hive – computer vision
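As a minimal sketch of how the traditional sensor stream might be represented, the hypothetical `HiveReading` record below holds one weight, temperature, and humidity sample, and the hypothetical `flag_weight_drops` helper marks readings where the hive lost weight abruptly, one simple kind of rule a monitoring system could use to surface hive events. The field names, units, and 1.0 kg threshold are assumptions for illustration, not the schema or rules SAS used.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HiveReading:
    """One in-hive sensor sample (hypothetical schema)."""
    timestamp: int        # Unix time, seconds
    weight_kg: float
    temperature_c: float
    humidity_pct: float


def flag_weight_drops(readings: List[HiveReading], max_drop_kg: float = 1.0) -> List[int]:
    """Return timestamps where weight fell by more than max_drop_kg since the previous reading.

    The 1.0 kg default is an illustrative placeholder, not a calibrated value.
    """
    ordered = sorted(readings, key=lambda r: r.timestamp)  # tolerate out-of-order delivery
    flagged = []
    for prev, cur in zip(ordered, ordered[1:]):
        if prev.weight_kg - cur.weight_kg > max_drop_kg:
            flagged.append(cur.timestamp)
    return flagged
```

Sorting first keeps the rule correct even if readings arrive out of order over the network.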

The Solution

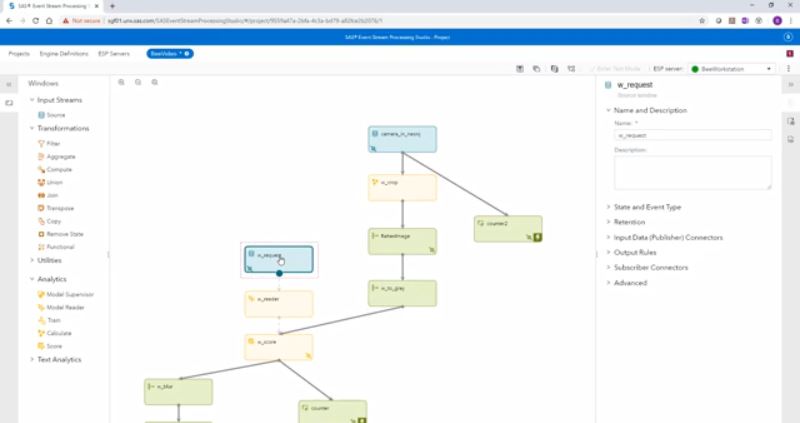

Sensors and an AI inference system collected data from bee hives on SAS’ campus in Cary, N.C. The team streamed hive data directly to the cloud to measure data points in and around the hive, such as weight, temperature, humidity, flight activity, and acoustics. Machine learning models were also used to “listen” to hive sounds, which can indicate the colony’s health, stress levels, swarming activity, and the status of the queen bee.
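Models that “listen” to audio typically work on spectral features rather than raw samples. As a minimal sketch, assuming NumPy and a mono audio window, the hypothetical `dominant_frequency` helper below applies a Hann window, takes the real FFT, and returns the frequency with the most energy. The article does not describe SAS’s acoustic features or models; this only illustrates the kind of input such a model might consume.

```python
import numpy as np


def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) with the most energy in a mono audio window."""
    x = np.asarray(samples, dtype=float)
    x = x - x.mean()                       # remove any DC offset
    window = np.hanning(len(x))            # taper edges to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(x * window))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / sample_rate)
    return float(freqs[np.argmax(spectrum)])


if __name__ == "__main__":
    rate = 8000
    t = np.arange(rate) / rate             # one second of synthetic audio, not a real recording
    hum = np.sin(2 * np.pi * 250 * t) + 0.3 * np.sin(2 * np.pi * 500 * t)
    print(dominant_frequency(hum, rate))
```

The tones in the usage example are synthetic; in practice a model would usually take a richer feature set (for example, energy in several frequency bands over time) rather than a single peak.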

SAS used Advantech’s MIC-720AI AI Inference System to process computer vision data from the video streams outside the bee hive. The MIC-720AI is part of Advantech’s MIC Jetson series, which is powered by the NVIDIA® Jetson™ platform.


The MIC-720AI brings GPU-accelerated computing to an embedded module. The device withstands industrial-grade vibration and high temperatures and has a compact, modular design. For this bee hive monitoring project, the MIC-720AI was placed in a weather-resistant enclosure in the field, where it had to withstand high temperatures and humidity.

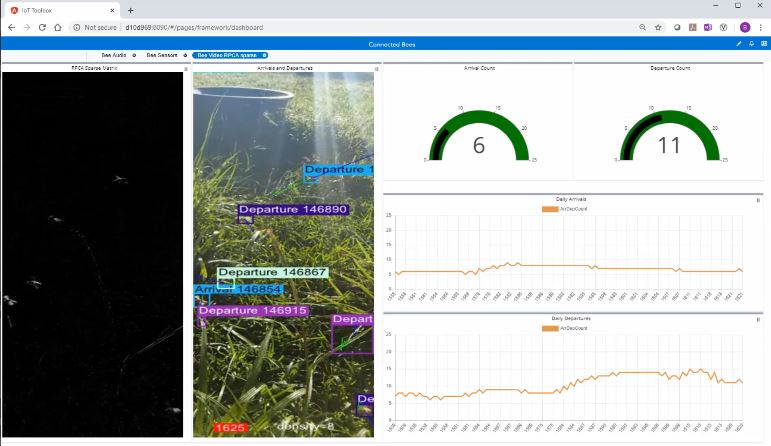

Because the collected data was so fine-grained (individual bees in video streams, the hum of the hive, and so on), the SAS team used robust principal component analysis (RPCA), a machine learning technique that splits a data matrix into a low-rank part and a sparse part. For example, RPCA helped separate the bees in the foreground from the grass in the background, even though the grass moves in the wind: the slowly changing background fits the low-rank part, while the small, fast-moving bees end up in the sparse part.
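The foreground/background split can be sketched with principal component pursuit, the convex formulation of RPCA from Candès et al.: flatten each video frame into one column of a matrix M, then split M into a low-rank background L and a sparse foreground S by minimizing ||L||_* + λ||S||_1 subject to L + S = M. The sketch below solves this with a basic augmented-Lagrangian (ADMM) loop in NumPy. The function names and the defaults λ = 1/sqrt(max(n1, n2)) and μ = n1·n2 / (4·sum|M|) are common textbook choices assumed here; the article does not describe SAS’s implementation.

```python
import numpy as np


def soft_threshold(x, tau):
    """Elementwise shrinkage: the proximal operator of tau * ||x||_1."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def singular_value_threshold(x, tau):
    """Shrink singular values: the proximal operator of tau * ||x||_* (nuclear norm)."""
    u, s, vt = np.linalg.svd(x, full_matrices=False)
    return (u * soft_threshold(s, tau)) @ vt   # scale each column of u by its shrunk singular value


def rpca(m, lam=None, mu=None, tol=1e-7, max_iter=1000):
    """Split m into (low_rank, sparse) via principal component pursuit solved with ADMM."""
    m = np.asarray(m, dtype=float)
    n1, n2 = m.shape
    lam = 1.0 / np.sqrt(max(n1, n2)) if lam is None else lam
    mu = n1 * n2 / (4.0 * np.abs(m).sum()) if mu is None else mu
    low_rank = np.zeros_like(m)
    sparse = np.zeros_like(m)
    dual = np.zeros_like(m)                    # Lagrange multiplier for the constraint L + S = M
    norm_m = np.linalg.norm(m)                 # Frobenius norm, used for the relative stopping test
    for _ in range(max_iter):
        low_rank = singular_value_threshold(m - sparse + dual / mu, 1.0 / mu)
        sparse = soft_threshold(m - low_rank + dual / mu, lam / mu)
        residual = m - low_rank - sparse
        dual += mu * residual
        if np.linalg.norm(residual) <= tol * norm_m:
            break
    return low_rank, sparse
```

In use, each grayscale frame would be reshaped to a column vector and the columns stacked with `np.column_stack`; thresholding `np.abs(sparse)` then gives a per-frame foreground mask. The SVD in every iteration is the main cost, which is one reason streaming or randomized variants are often preferred for long videos.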

The data was then compiled in a dashboard for real-time analysis. Industrial-grade edge hardware such as the MIC-720AI allowed the analytics to run directly at the beehive. This was especially important for computer vision, where SAS was interested in the results rather than the raw video. Transmitting only the results over the cellular network connection made efficient use of that connection.
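Sending results instead of video can be sketched as follows. Assuming the vision stage produces a binary foreground mask per frame (for example, by thresholding an RPCA foreground), the hypothetical `count_bees` helper counts 4-connected blobs as a rough bee count, and the hypothetical `summarize` packs a window of frames into a small JSON payload for the cellular uplink. Treating each blob as one bee, the payload fields, and the hive ID are assumptions for illustration; the article does not describe SAS’s message format.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Sequence

Mask = Sequence[Sequence[int]]  # 1 = foreground pixel, 0 = background


@dataclass
class ActivitySummary:
    """Hypothetical per-window result sent instead of raw video."""
    hive_id: str
    timestamp: int          # Unix time of the window, seconds
    frames_analyzed: int
    peak_bees: int          # largest blob count seen in any frame


def count_bees(mask: Mask) -> int:
    """Count 4-connected foreground blobs; each blob is treated as one bee."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = set()
    blobs = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or (r, c) in seen:
                continue
            blobs += 1
            seen.add((r, c))
            stack = [(r, c)]
            while stack:  # iterative flood fill avoids recursion limits on large blobs
                y, x = stack.pop()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and (ny, nx) not in seen:
                        seen.add((ny, nx))
                        stack.append((ny, nx))
    return blobs


def summarize(hive_id: str, timestamp: int, masks: List[Mask]) -> bytes:
    """Reduce a window of frame masks to a compact JSON payload."""
    summary = ActivitySummary(
        hive_id=hive_id,
        timestamp=timestamp,
        frames_analyzed=len(masks),
        peak_bees=max((count_bees(m) for m in masks), default=0),
    )
    return json.dumps(asdict(summary), separators=(",", ":")).encode("utf-8")
```

For scale, a single uncompressed 1080p RGB frame is 1920 × 1080 × 3 = 6,220,800 bytes, while a summary like this is under a hundred bytes per window.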

Related Product

MIC-720AI

AI Inference System based on NVIDIA® Jetson™ Tegra X2 with 256 CUDA Cores

The MIC-720AI is an ARM-based system built around the NVIDIA® Jetson™ Tegra X2 System-on-Module processor. It provides 256 CUDA® cores on the NVIDIA® Pascal™ architecture and is designed for edge applications that need rich I/O and low power consumption.

The system offers 4GB of LPDDR4 memory, 4K video encode/decode, two USB 3.0 ports, Power-over-Ethernet (PoE), and mSATA expansion. Wall-mount brackets and a fanless design make it easy to install in rugged environments. This small embedded system is well suited to AI inference at the edge and deep learning applications.